I love my D365 email notifications

said nobody

Recently I spoke at a conference with Dynamics users, and I asked the group of about 50 people who liked their CRM email notifications. Nobody raised their hand.

There are several problems with traditional workflow-based email notifications:

- Everybody gets too many emails.

- Blanket workflow-based emails will include some notifications that I don’t care about.

- System-generated email messages that I can’t control diminish my sense of control.

- Users who are overwhelmed by too many notifications will ignore or delete them.

Alternative options

Fortunately, there are better ways to notify in Dynamics 365. One option is to not notify at all and instead teach your users how to use views and dashboards to see the records they need to be aware of. You can also use activity feeds and set up automatic posts via workflow.

Another approach (currently in preview) is to use Flow to create new relationship assistant cards.

These are all great options, but in this post I’m going to show you an approach that I’ve used that lets the user decide what he or she will be notified about.

Notification Subscription Center

In this approach we give users choice in what they are notified about and the way in which they are notified. Want to try this solution out? Download a copy at the TDG Power Platform Bank.

Create the subscription center CDS entity

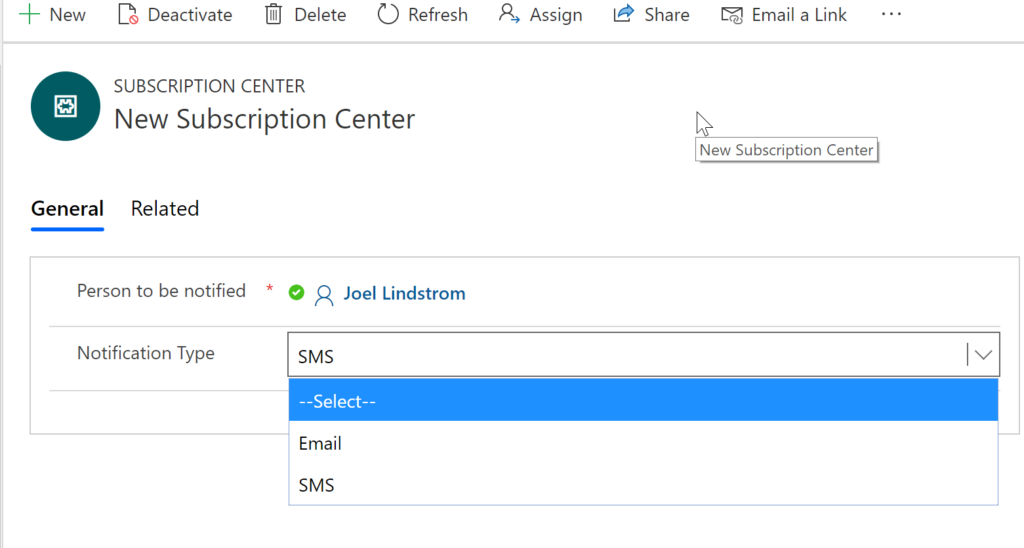

Create a custom entity called Subscription center. You will need the following components:

- Standard owner field (who should be notified)

- Notification type: the way the user will be notified. I chose an option set because, while I initially have only email and SMS options, I may add other notification types later, such as mobile push.

- An N:1 relationship with each entity for which you wish to enable notification subscriptions. You do not have to display the lookup fields for these entities on the form if users will be creating notification subscriptions from the parent record.

Now users can “subscribe” to any record for notification and specify the type of notification they wish to receive.
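To make the shape of the entity concrete, here is a minimal sketch of a subscription record in Python. It is only an illustration of the components listed above, not the actual CDS schema: the field names, the option set values, and the `account_id` lookup are hypothetical, and the rule that each subscription points at exactly one parent record is my assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class NotificationType(Enum):
    # Hypothetical option set values; the real entity defines its own.
    EMAIL = 1
    SMS = 2


@dataclass
class Subscription:
    owner_id: str                           # standard owner field: who should be notified
    notification_type: NotificationType     # how the owner wants to be notified
    opportunity_id: Optional[str] = None    # N:1 lookup to an opportunity
    account_id: Optional[str] = None        # N:1 lookup to another entity (illustrative)


def subscribe(owner_id, notification_type, *, opportunity_id=None, account_id=None):
    """Create a subscription tied to exactly one parent record (assumed rule)."""
    parents = [p for p in (opportunity_id, account_id) if p is not None]
    if len(parents) != 1:
        raise ValueError("a subscription must point at exactly one parent record")
    return Subscription(owner_id, notification_type, opportunity_id, account_id)
```

Adding a new notification type later, such as mobile push, would then only mean adding one more option to `NotificationType`.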

Creating the Flow

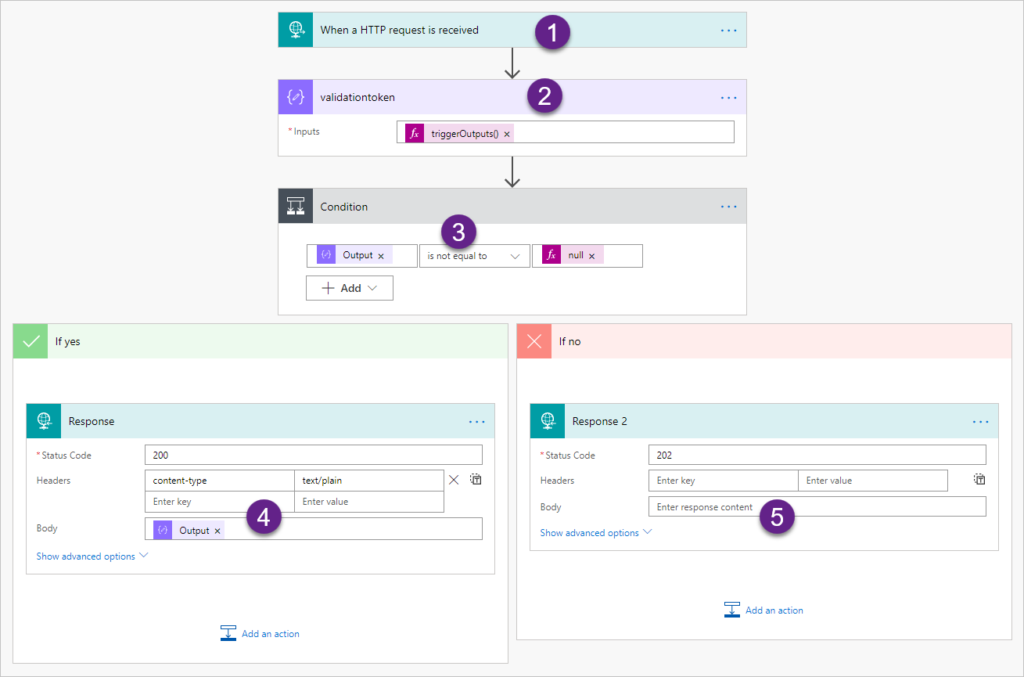

Now, when you want to create a notification, such as one that fires when an opportunity is won, you create a flow.

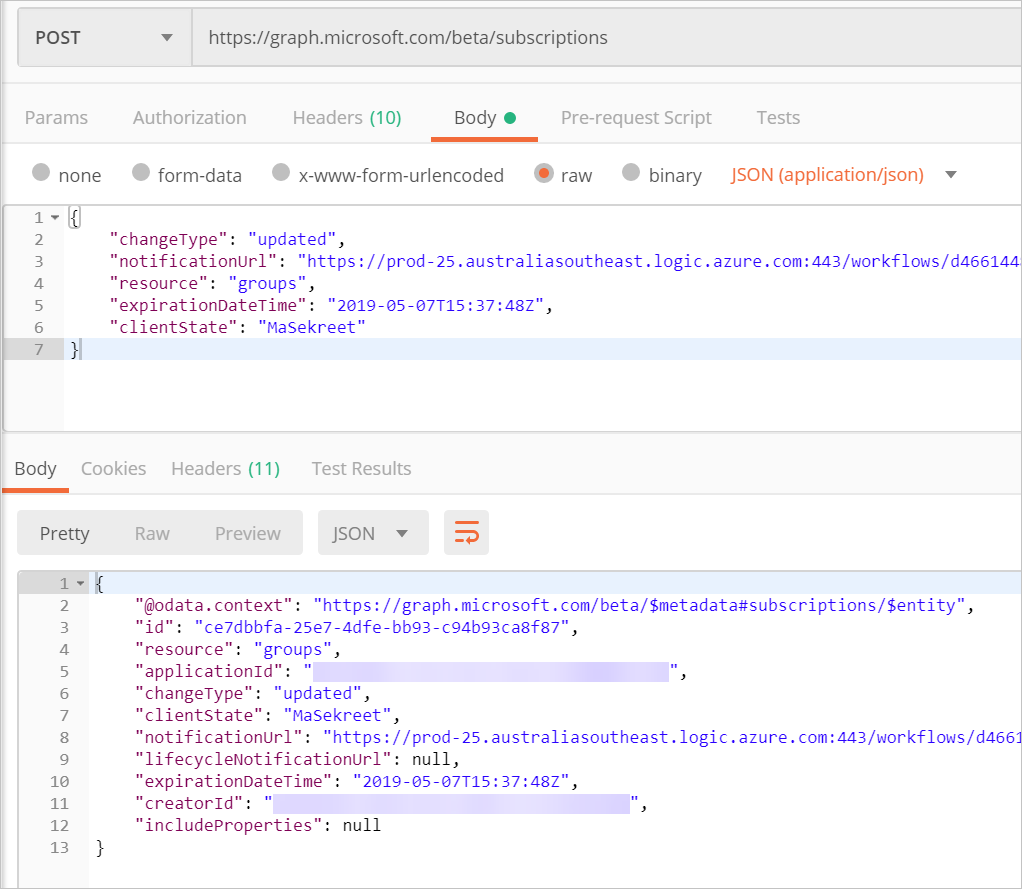

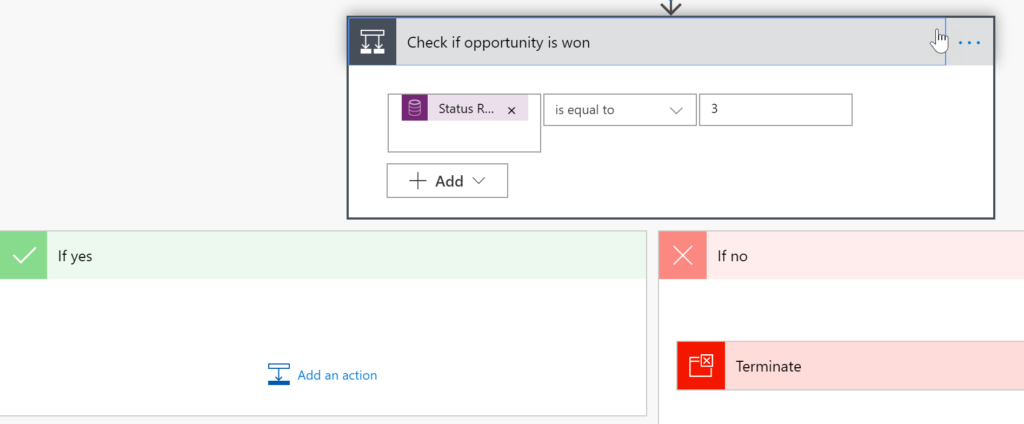

1. Create a flow that runs on change of the opportunity status reason field.

2. Check to see if the opportunity is won.

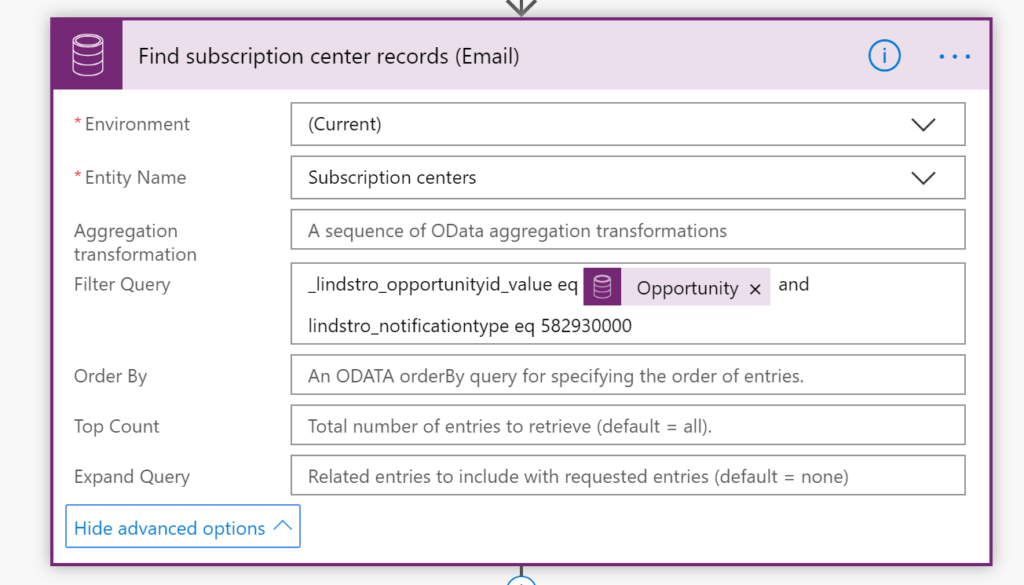

3. List subscription center records related to the opportunity where notification type equals email.

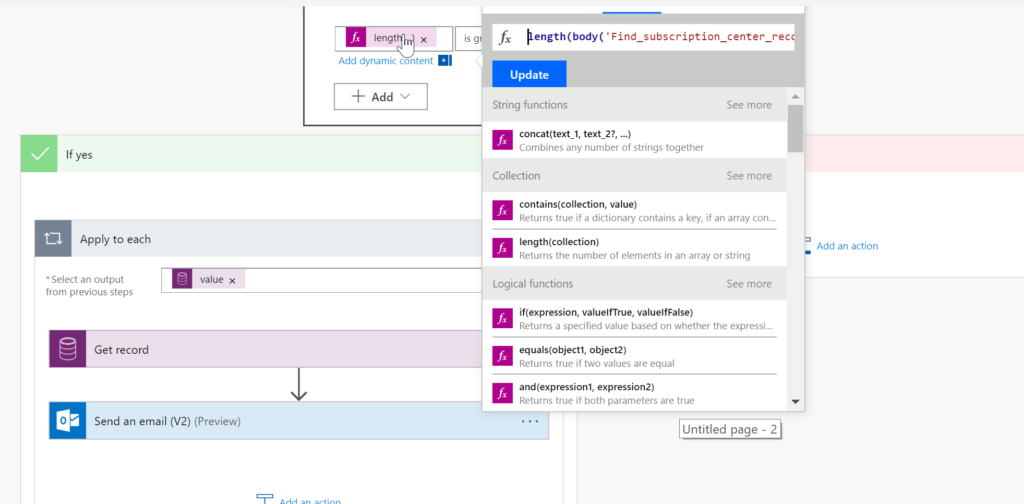

4. List subscription center records related to the opportunity where notification type equals SMS.

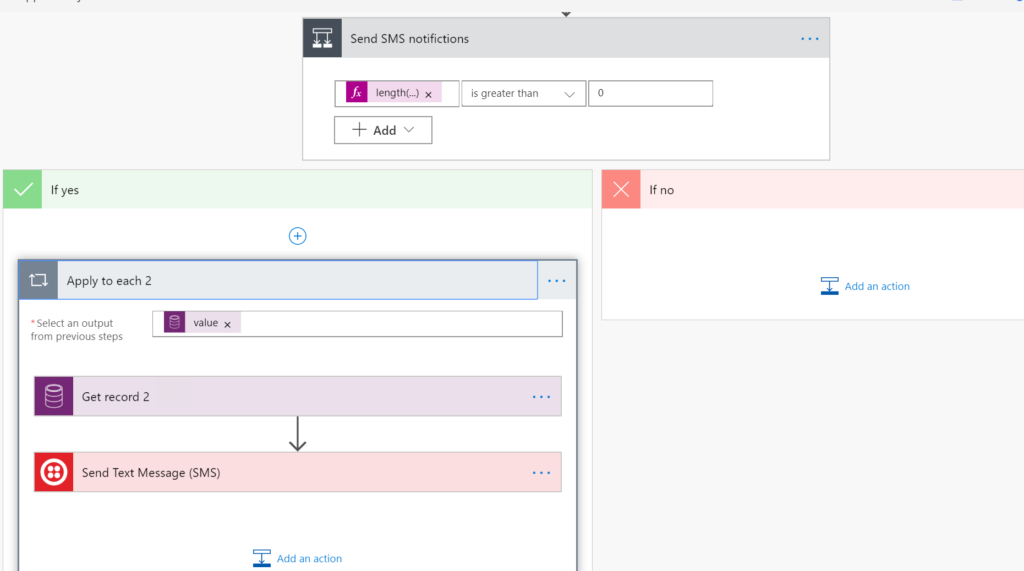

5. Insert a condition step and, using the length expression, check whether the email list records step returned at least one record. If it did, add an apply to each over the value of the email list records step and send an email to each subscriber. You will need to get the user record of the subscription's owner to get the email address.

6. Do the same thing for the SMS subscribers, sending them a text message via Twilio.
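The flow itself is built in the designer, but the logic of the steps above can be sketched in plain Python. This is a rough illustration under stated assumptions, not an export of the flow: the dictionary keys, the `"won"` status value, the message text, and the `send_email` and `send_sms` callables (standing in for the email and Twilio actions) are all hypothetical.

```python
def notify_on_win(opportunity, subscriptions, users, send_email, send_sms):
    """Mirror the flow: check for a win, list subscribers by type, loop and send."""
    # Step 2: only continue if the opportunity is won.
    if opportunity["status"] != "won":
        return 0

    # Steps 3 and 4: list subscriptions for this opportunity, split by type.
    related = [s for s in subscriptions if s["opportunity_id"] == opportunity["id"]]
    email_subs = [s for s in related if s["notification_type"] == "email"]
    sms_subs = [s for s in related if s["notification_type"] == "sms"]

    message = f"Opportunity {opportunity['name']} was won."
    sent = 0

    # Step 5: condition on length, then apply to each email subscriber.
    if len(email_subs) > 0:
        for sub in email_subs:
            owner = users[sub["owner_id"]]  # look up the owner's user record
            send_email(owner["email"], message)
            sent += 1

    # Step 6: same pattern for SMS subscribers.
    if len(sms_subs) > 0:
        for sub in sms_subs:
            owner = users[sub["owner_id"]]
            send_sms(owner["mobile"], message)
            sent += 1

    return sent


# Usage with in-memory stand-ins for the connectors.
emails, texts = [], []
users = {
    "u1": {"email": "ann@example.com", "mobile": "+15550001"},
    "u2": {"email": "bob@example.com", "mobile": "+15550002"},
}
subscriptions = [
    {"owner_id": "u1", "opportunity_id": "opp-1", "notification_type": "email"},
    {"owner_id": "u2", "opportunity_id": "opp-1", "notification_type": "sms"},
    {"owner_id": "u2", "opportunity_id": "opp-2", "notification_type": "email"},
]
won = {"id": "opp-1", "name": "Big Deal", "status": "won"}
count = notify_on_win(
    won, subscriptions, users,
    lambda to, body: emails.append((to, body)),
    lambda to, body: texts.append((to, body)),
)
```

Only people who subscribed to the won opportunity are contacted, and each through the channel they chose.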

Now users can choose what they get notified about and how they are notified.

Bonus tip

Check out this Flow template to see how to send a weekly digest email of notifications.

Cover image by Daderot [Public domain], via Wikimedia Commons
