If you’re like me, then from time to time you need to mirror your iPhone to a Windows machine to show something cool, usually during a demo. Searching the bottomless pits of the Internet for “How to mirror iPhone to Windows” will get you a never-ending stream of ads and headache-inducing instructions to use LonelyScreen, AirParrot, ApowerMirror, Reflector, or AirServer. ChatGPT went one better by recommending an ephemeral “Your Phone” app. Any of these paid apps would be nice if they didn’t depend on the Wi-Fi gods, who like to block ports whenever a conference name includes the words Dynamics or Power Platform.

Scrap all that.

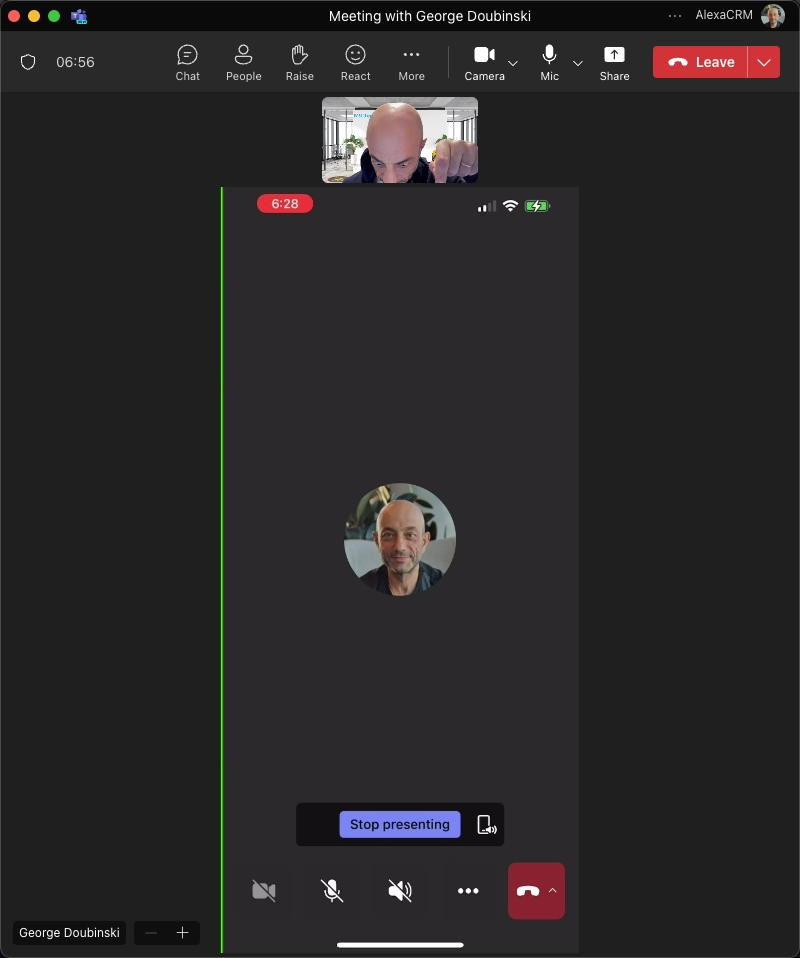

Have Microsoft Teams? Of course, you do.

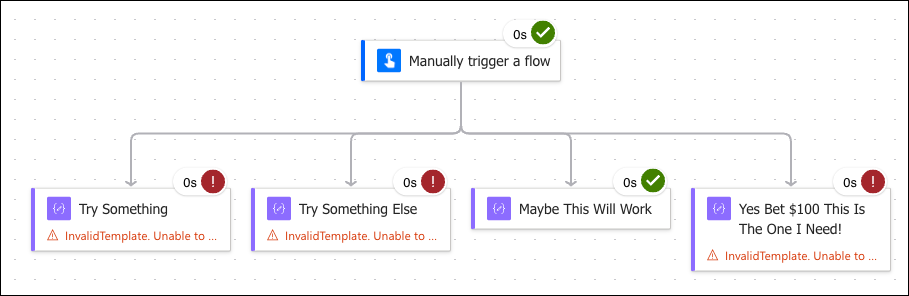

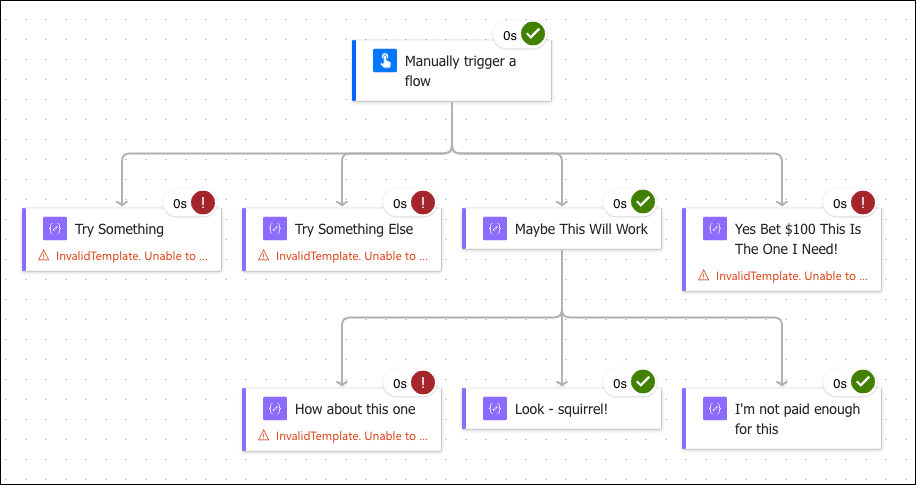

Have a meeting with yourself: join it from both your Windows machine and your iPhone, then share the screen from your iPhone.

That’s it. You heard it here first. And yes, Zoom works too.