It is that time of year when people turn their thoughts to upgrading: the October '18 release has been announced and, for those on Dynamics 365 Online v8, the clock has started. I was recently with a client who had a pretty typical setup: Dynamics 365 Online, NAV 2016, and an online shopping system. They asked me about the looming upgrade of Dynamics 365 and whether they should be concerned about anything.

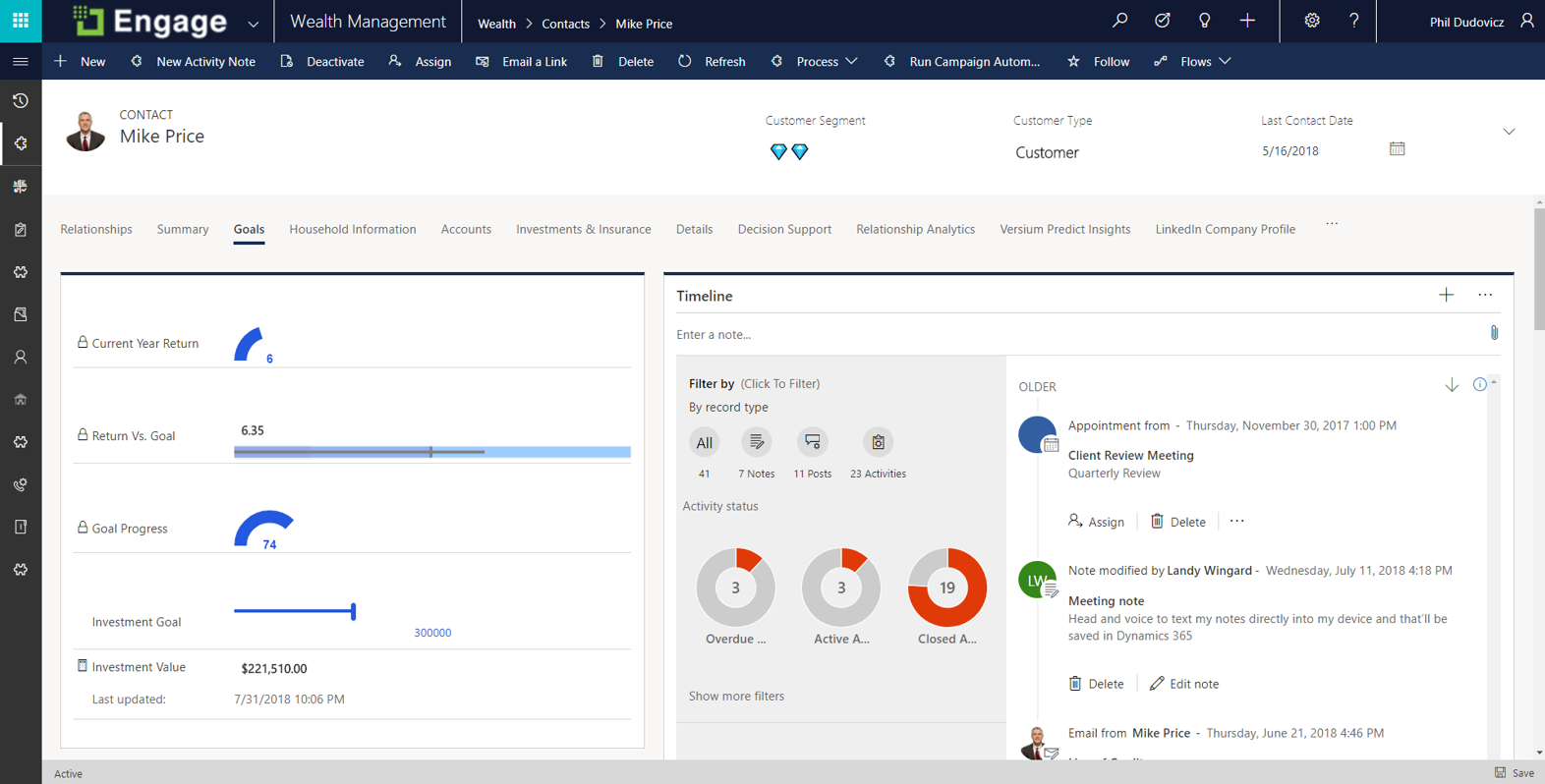

I raised the usual recommendations regarding upgrading Dynamics (backing up, doing it in a non-production environment first, etc.), but then we got talking about the rest of the setup. Firstly, they were using the NAV 2016 Connector to talk to Dynamics, but it was not working fully. They had custom fields in NAV which never made it to Dynamics, and this compromised the reporting in Dynamics, limiting its usefulness to the sales team. Knowing the NAV 2016 Connector was not an ongoing concern for Microsoft (and that it never handled custom fields well), the obvious choice was to move to the Azure-based integration as soon as possible.

So we should upgrade NAV 2016 before the end of the year, right? Well, there was another problem. NAV 2016 was connected to the online shopping system via a proprietary integration piece. If NAV 2016 were upgraded, this integration would break and the online shopping system would also need to be upgraded. The problem? The estimated cost of upgrading the online shopping system ran to six figures, and finding that kind of money in the next few months would be tricky.

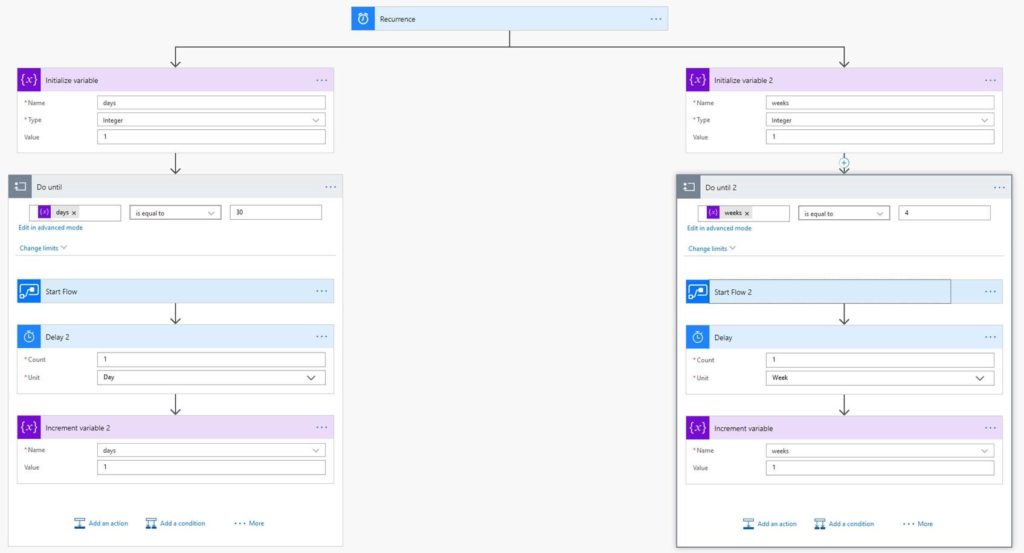

The end solution? Dynamics 365 would go first. This was relatively low risk given the limited customisations, and it should not affect the other components. Next, rather than upgrade NAV 2016, I recommended they either have a custom NAV 2016 Azure Connector developed to meet their needs or, if this proved too difficult, develop a custom integration piece. Initially this could cover the custom fields missing from Dynamics 365 and then, once it proved successful, expand to cover the entities and fields handled by the incumbent Connector, if they wanted to manage everything in one place. I warned them that a custom integration piece would likely be thrown away in the future as they moved to using more Azure components, but it was a reasonable short-term measure until they upgraded the shopping site and NAV 2016.

The moral of the story? Consider the full ecosystem when looking to upgrade, and the consequences the upgrade might have. Also, while there may be an obvious solution, such as getting off the NAV 2016 Connector, considering the bigger picture may sometimes require short-term compromise rather than perfection.